Developing an MCP Scenario with TypeScript: A production-ready reference implementation

Introduction

This article presents a production-ready Model Context Protocol (MCP) scenario. Unlike many other explanations, which are written in C#, this example is fully implemented in TypeScript. The reference implementation aims to provide a clearly understandable and practical solution that goes beyond simple Hello World examples: it aggregates best practices from numerous «how-to» guides, «getting started» guides, and documentation into a solid architecture. It’s an approach that leads from «knowing how it works» to «making it work».

The implementation is based on two separate basic concepts:

- a client setup that acts as an agent, which uses the «AI Toolkit for TypeScript» by Vercel (the AI SDK from the creators of Next.js, a free, open-source library that gives you the tools you need to build AI-powered products) and instantiates the MCP client to communicate with the MCP server,

- and a server setup, implemented as a lightweight application that spins up the MCP server.

Important

For the sake of clarity, only the components relevant to the MCP setup are highlighted below.

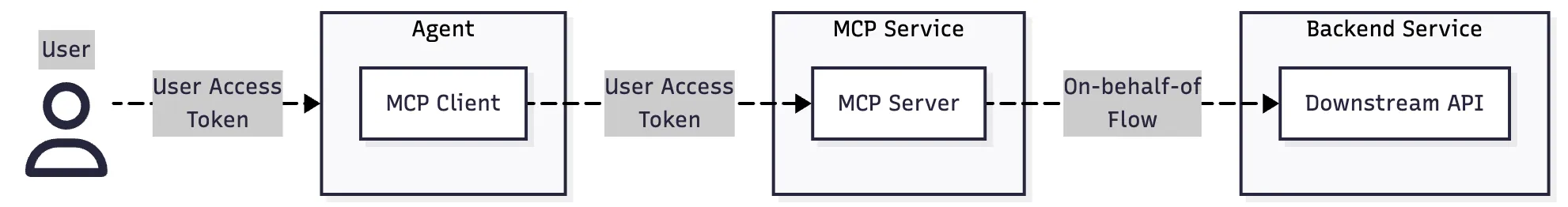

The user interacts with the agent (aka our actual AI application with access to an LLM), which establishes a secured (OAuth) connection to the MCP service (with the MCP server) via the MCP client. The user’s access token is transmitted from the client to the server, where it is validated and processed.

From within its tools, the MCP service can then access any downstream API that is also secured with OAuth, in the context of the user («on behalf of» flow, aka OBO). For this token exchange, the MCP server authenticates with its own application credentials (client ID and client secret). Although the OBO request is optional and must be fully managed by the MCP server itself, it provides a secure and reliable approach to retrieve data from a backend API based on the access scope of the calling user.

Reference Implementation

Tip

Check out my mcp-typescript-sample reference implementation on GitHub (https://github.com/tmaestrini/mcp-typescript-sample) for detailed insights.

The following code snippets show only the core components of the server and client setup as well as the corresponding npm packages.

General Client setup

Let’s start with the client. It can act as a simple command line application (CLI), as done in this reference implementation. It can also be adapted to real-world scenarios such as web applications with chat interfaces or background processes.

Basic Setup

After the user token has been retrieved (it is required for an authenticated request!), the client’s basic task in the MCP setup is to establish communication with the MCP server. Besides communicating with the MCP server, it provides the calling agent with the server’s tools:

```typescript
// client/src/main.ts
import MCPClientFactory from './mcp/client'; // default export of client/src/mcp/client.ts

// Get the user's OAuth token (from a frontend app, a CLI, ...);
// getUserTokenSomehow() is a placeholder for your own sign-in logic
const userToken = getUserTokenSomehow();

// Create an MCP client to handle communication with the remote MCP server
const client = await MCPClientFactory(userToken);

try {
  // List the tools registered with the MCP server to pass them to the agent
  const toolset = await client.tools();
  const tools = { ...toolset }; // optionally remove "duplicated tools" with the same name

  // Agent tasks: start handling user inputs and LLM interactions (scroll down for the implementation)
  await agentTasksLoop(tools);
} finally {
  // Close the connection to the MCP server when the agent is done
  await client.close();
}
```

Instantiating the MCP client (MCPClientFactory)

In this implementation, the client function acts as the MCPClientFactory. It instantiates a new MCP client connection to the server and returns the instance to the caller. First it configures the HTTP transport, with the Bearer token in its Authorization header. The code then creates the actual MCP client instance using createMCPClient(), passing the transport and giving the client the descriptive name demo-mcp-client. This name helps identify the client in server logs and debugging scenarios:

```typescript
// client/src/mcp/client.ts
import {
  experimental_createMCPClient as createMCPClient,
  experimental_MCPClient as MCPClient,
} from 'ai';
import { StreamableHTTPClientTransport } from '@modelcontextprotocol/sdk/client/streamableHttp.js';

// This function acts as the MCPClientFactory
export default async function client(userToken: string): Promise<MCPClient> {
  // Connect to the MCP server and pass the bearer token for authentication
  const httpTransport = new StreamableHTTPClientTransport(
    new URL(process.env.REMOTE_MCP_SERVER_BASE_URL || 'http://localhost:3000/mcp'),
    {
      requestInit: {
        headers: {
          Authorization: `Bearer ${userToken}`,
        },
      },
    },
  );

  // Create the MCP client on top of the transport; the name shows up in server logs
  const mcpClient = await createMCPClient({
    transport: httpTransport,
    name: 'demo-mcp-client',
  });

  return mcpClient;
}
```

Some notes on the snippet: the client function returns an MCPClient (from the ai module) that holds the connection to the MCP server. The transport option uses StreamableHTTPClientTransport, which establishes an HTTP-based connection to the server.

Now, we come to an important point:

Note

The userToken parameter (passed in by the caller) contains the actual access token, obtained through a prior authentication flow. That’s the first part of the auth flow in this MCP setup.
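The client only passes the token through, so a CLI client could at least check whether the token has already expired before connecting, and fail fast with a clear message. The following minimal sketch makes some assumptions:

- the token is a JWT;
- it runs on Node.js (for `Buffer`);
- the helper names `decodeJwtPayload` and `isTokenExpired` are hypothetical and are not part of the reference implementation.

It decodes the `exp` claim without verifying the signature. Signature verification is the server's job.

```typescript
// Hypothetical helpers (not part of the reference implementation):
// decode a JWT payload WITHOUT verifying it, only to detect an expired token early.
interface JwtPayload {
  exp?: number; // expiry time in seconds since the Unix epoch
  [claim: string]: unknown;
}

function decodeJwtPayload(token: string): JwtPayload | undefined {
  const parts = token.split('.');
  if (parts.length !== 3) return undefined; // not a compact JWT (header.payload.signature)
  try {
    // JWT segments are base64url-encoded JSON
    const json = Buffer.from(parts[1], 'base64url').toString('utf8');
    return JSON.parse(json) as JwtPayload;
  } catch {
    return undefined;
  }
}

// Returns true if the token is expired or expires within `skewSeconds`
function isTokenExpired(token: string, nowMs: number = Date.now(), skewSeconds = 60): boolean {
  const payload = decodeJwtPayload(token);
  if (!payload || typeof payload.exp !== 'number') return true; // treat undecodable tokens as unusable
  return payload.exp * 1000 <= nowMs + skewSeconds * 1000;
}
```

In main.ts, such a check would run right after `getUserTokenSomehow()` and before `MCPClientFactory(userToken)` is called.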

Handling user inputs and LLM interactions

To interact with the LLM, we can rely on the «AI Toolkit for TypeScript» by Vercel and enable the LLM to use the (remote) server tools obtained before. By the way: the type annotation for the tools parameter in the signature of agentTasksLoop (Awaited<ReturnType<MCPClient['tools']>>) derives its type from the MCP client’s tools() method. That way, TypeScript infers the tools object returned by the MCP client correctly.

First of all, the desired LLM provider must be instantiated to establish the binding to an LLM of your choice. In my reference implementation, this is done by creating an Ollama provider instance that points to a local Ollama server running on port 11434. I’ve chosen the “qwen3:4b” model because it is a relatively lightweight 4-billion-parameter model suitable for local execution. This could just as well be any other cloud-hosted LLM binding (see https://ai-sdk.dev/providers/ai-sdk-providers), for instance the OpenAI provider with openai('gpt-5') or the Azure OpenAI provider with azure('your-deepseek-r1-deployment-name'). Finally, the response generation starts with a call to the generateText function.

This pattern creates a hybrid system that combines local AI processing with remote service capabilities. It allows the local LLM to access external capabilities through MCP tools while maintaining the conversational context. The LLM decides when to call tools based on the user’s request and integrates the results into its response:

```typescript
// client/src/main.ts
import { createOllama } from 'ollama-ai-provider-v2';
import { openai } from '@ai-sdk/openai'; // 👈 alternative to Ollama, e.g. openai('gpt-5')
import { experimental_MCPClient as MCPClient, generateText, stepCountIs } from 'ai'; // 👈 «AI Toolkit for TypeScript» by Vercel

async function agentTasksLoop(tools: Awaited<ReturnType<MCPClient['tools']>>) {
  // intentionally omitting fragments for educational purposes

  // EXAMPLE: LLM binding to Ollama (local LLM);
  // change the LLM setup according to your choice
  const ollama = createOllama({
    baseURL: 'http://localhost:11434/api',
  });
  const ollamaModel = ollama('qwen3:4b');

  const response = await generateText({
    model: ollamaModel,
    tools,
    stopWhen: stepCountIs(5),
    messages: [{
      role: 'user',
      content: [{ type: 'text', text: 'Simply repeat this text.' }],
    }],
  });
  console.log(response.text);

  /**
   * CLOSING NOTE:
   * Choose between generateText and streamText based on your application's needs:
   * - generateText: when you need the complete response for processing, logging,
   *   or when the user doesn't need to see incremental progress
   * - streamText: when building interactive UIs where you want to show text appearing
   *   in real time, improving perceived performance and user experience
   */

  // intentionally omitting fragments for educational purposes
}
```

👉 Note on the generateText function. The call combines several key elements:

- Model binding: Uses the local Ollama model (or any other cloud-hosted model) for text generation

- Tool integration: Passes the MCP tools, enabling the LLM to call remote server functions

- Stop condition: Limits execution to 5 steps to prevent infinite tool-calling loops (a lower value makes the agent «come to the point» earlier)

- Message format: Follows the standard chat completion format with user/assistant roles (it could also contain additional instructive assistant messages)
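The stop condition is easier to grasp with a concrete loop. The following sketch is a strongly simplified, hypothetical model of a multi-step tool-calling loop, not the AI SDK’s actual implementation. In each step, the model either answers with text or requests tool calls. Tool results are appended to the conversation. The loop ends when the model answers or when the step limit is reached, which is the role `stopWhen: stepCountIs(5)` plays above. The types `Model`, `Tools` and `ModelTurn` and the function `runAgentLoop` are made up for illustration.

```typescript
// Simplified, hypothetical model of a multi-step tool-calling loop
// (NOT the AI SDK's implementation; for illustration only).
type Message = { role: 'user' | 'assistant' | 'tool'; content: string };
type ToolCall = { toolName: string; args: Record<string, unknown> };
type ModelTurn = { text?: string; toolCalls?: ToolCall[] };
type Model = (messages: Message[]) => Promise<ModelTurn>;
type Tools = Record<string, (args: Record<string, unknown>) => Promise<string>>;

async function runAgentLoop(model: Model, tools: Tools, userText: string, maxSteps = 5) {
  const messages: Message[] = [{ role: 'user', content: userText }];
  for (let step = 1; step <= maxSteps; step++) {
    const turn = await model(messages);
    // No tool calls: the model produced its final answer
    if (!turn.toolCalls || turn.toolCalls.length === 0) {
      return { text: turn.text ?? '', steps: step };
    }
    // Record the tool request, execute each tool and feed the results back
    messages.push({ role: 'assistant', content: JSON.stringify(turn.toolCalls) });
    for (const call of turn.toolCalls) {
      const tool = tools[call.toolName];
      const result = tool ? await tool(call.args) : `Unknown tool: ${call.toolName}`;
      messages.push({ role: 'tool', content: result });
    }
  }
  // Step limit reached: stop instead of looping forever
  return { text: '', steps: maxSteps };
}
```

With a well-behaved model, the loop ends after the first text answer. A model that keeps requesting tools is cut off after `maxSteps` rounds.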

General Server setup

The main function of the server part in an MCP setup is to provide the tools. To handle the MCP requests from the client, the server exposes an HTTP endpoint, implemented as a POST route at /mcp that processes JSON-RPC requests:

```typescript
// server/src/main.ts
import express, { Request, Response } from 'express';
import { StreamableHTTPServerTransport } from '@modelcontextprotocol/sdk/server/streamableHttp.js';
import { server } from './mcp/server';
import authorizeRequest from './auth/validation';

const app = express();
app.use(express.json()); // parse JSON-RPC request bodies into req.body

app.post('/mcp', authorizeRequest, async (req: Request, res: Response) => {
  // Get the user's OAuth token from the HTTP request (authentication context);
  // app.locals.authContext is initialized elsewhere (omitted here)
  const token = req.headers.authorization?.replace('Bearer ', '') as string;
  app.locals.authContext.setAuthContext({ token });

  try {
    // Stateless mode: a new transport for every request
    const transport = new StreamableHTTPServerTransport({
      sessionIdGenerator: undefined, // no session ID generator for stateless mode
      enableJsonResponse: true, // respond with plain JSON instead of an SSE stream
    });
    res.on('close', () => {
      transport.close();
    });

    console.log('Received MCP request:', req.body?.method, req.body?.params);
    await server.connect(transport);
    await transport.handleRequest(req, res, req.body);
  } catch (err) {
    console.error('Error handling MCP request:', err);
    if (!res.headersSent) {
      res.status(500).json({
        jsonrpc: '2.0',
        error: { code: -32603, message: 'Internal server error' },
        id: null,
      });
    }
  }
});

// Port 3000 matches the client's default URL (http://localhost:3000/mcp)
app.listen(3000, () => console.log('MCP server listening on http://localhost:3000/mcp'));
```

Let’s focus first on the request itself. When a request arrives, the system first extracts the Bearer token from the Authorization header. It then stores the token in the application’s authentication context to make it available throughout the whole request lifecycle, and therefore to other server components such as the tools. This allows the MCP server to identify and authorize the requesting client.
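For orientation, this is roughly what arrives at the /mcp endpoint when the agent calls a tool: an HTTP POST carrying the bearer token and a JSON-RPC 2.0 `tools/call` message. The sketch below only builds such a request and does not send it. In practice, the MCP client builds and sends these messages for you.

- The helper name `buildToolsCallRequest` is hypothetical.
- The method name `tools/call` and the `params.name`/`params.arguments` fields follow the MCP specification.
- The `Accept` header, which lists both `application/json` and `text/event-stream`, follows the Streamable HTTP transport specification.

```typescript
// Hypothetical helper: builds (but does not send) the HTTP request an MCP client
// would POST to the /mcp endpoint to invoke a tool.
interface JsonRpcRequest {
  jsonrpc: '2.0';
  id: number | string;
  method: string;
  params?: Record<string, unknown>;
}

function buildToolsCallRequest(
  userToken: string,
  toolName: string,
  args: Record<string, unknown>,
  id: number = 1,
): { method: 'POST'; headers: Record<string, string>; body: string } {
  const message: JsonRpcRequest = {
    jsonrpc: '2.0',
    id,
    method: 'tools/call',
    params: { name: toolName, arguments: args },
  };
  return {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      // Streamable HTTP clients accept both plain JSON and SSE responses
      Accept: 'application/json, text/event-stream',
      // The server's authorizeRequest middleware reads this header
      Authorization: `Bearer ${userToken}`,
    },
    body: JSON.stringify(message),
  };
}
```

The returned object has the shape of a `fetch` init object, so it could be passed as the second argument of `fetch('http://localhost:3000/mcp', ...)` for manual experiments.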

Note

The Bearer token is extracted from the Authorization header – exactly where we would expect a token in any request that is secured by a valid access token.
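Note that `replace('Bearer ', '')` is lenient. It is case-sensitive, and a header without the prefix is passed through unchanged. A slightly stricter alternative parses the header according to the `Bearer` scheme of RFC 6750, where the scheme name is case-insensitive, and returns `undefined` for anything else. The helper below is hypothetical and not part of the reference implementation.

```typescript
// Hypothetical stricter alternative to `authorization?.replace('Bearer ', '')`:
// accepts only "Bearer <token>" (scheme is case-insensitive) and returns the token.
// The token character set follows the b64token grammar of RFC 6750.
const BEARER_PATTERN = /^Bearer\s+([A-Za-z0-9\-._~+\/]+=*)$/i;

function extractBearerToken(authorizationHeader: string | undefined): string | undefined {
  if (!authorizationHeader) return undefined;
  const match = BEARER_PATTERN.exec(authorizationHeader.trim());
  return match ? match[1] : undefined;
}
```

In the route handler and the middleware, `const token = extractBearerToken(req.headers.authorization);` would then replace the `replace(...)` call.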

Authenticated requests and token validation

In this reference implementation, an Express middleware called authorizeRequest intercepts the request flow and, as the name suggests, checks the validity of the token:

```typescript
// server/src/auth/validation.ts
import express from 'express';

export default async function authorizeRequest(req: express.Request, res: express.Response, next: express.NextFunction) {
  const token = req.headers.authorization?.replace('Bearer ', '') || (req.headers['x-api-key'] as string | undefined);

  if (!token) {
    // JSON-RPC error response; id is null because the request was not processed
    res.status(401).json({
      jsonrpc: '2.0',
      error: { code: -32001, message: 'Authentication token required' },
      id: null,
    });
    return;
  }

  // Check for other authorization-related headers if needed

  // Finally, validate the token; isValidToken() is a placeholder for your own
  // validation logic (signature, scope and tenant checks)
  const tokenValidation = await isValidToken(token);
  if (!tokenValidation.isValid) {
    res.status(401).json({
      jsonrpc: '2.0',
      error: { code: -32003, message: 'Invalid authentication token' },
      id: null,
    });
    return;
  }

  // If the token is valid, proceed to the next middleware or route handler
  next();
}
```

Make sure that you always validate the token. First verify its signature and validity (e.g., with jwt.verify). Then check its claims by reading the scp value from the token payload. The scope makes sure that the requester (aka the authenticated user) has the appropriate rights to call this service; in my example, the user has requested the mcp:tools scope.

Note

To further restrict the usage of the server tools to specific tenants, implement a tenant check (by validating the tid claim) in the authorization flow.

See reference implementation for further details: https://github.com/tmaestrini/mcp-typescript-sample/blob/0ee006ea76e9714a0a1970d064b0b9ff6b146bf9/server/src/auth/validation.ts#L57
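The claim checks themselves can be kept in a small pure function that runs after the signature, issuer, audience and lifetime have been verified (for example with `jwt.verify` from the `jsonwebtoken` package and Entra ID’s published signing keys). The sketch below is hypothetical and is not the code of the reference implementation. It makes these assumptions:

- the verified payload exposes `scp` as a space-separated string of delegated scopes and `tid` as the tenant ID, as Entra ID access tokens do;
- the required scope is the `mcp:tools` scope from the example above.

```typescript
// Hypothetical claim checks, to run AFTER the token signature, issuer,
// audience and lifetime have been verified (e.g. with jwt.verify).
interface VerifiedClaims {
  scp?: string; // delegated scopes, space-separated (Entra ID access tokens)
  tid?: string; // tenant ID of the signed-in user
}

type ClaimCheckResult = { isValid: true } | { isValid: false; reason: string };

function checkClaims(
  claims: VerifiedClaims,
  requiredScope: string,
  allowedTenantIds: readonly string[],
): ClaimCheckResult {
  // Scope check: the user must have consented to / requested the required scope
  const scopes = (claims.scp ?? '').split(/\s+/).filter(Boolean);
  if (!scopes.includes(requiredScope)) {
    return { isValid: false, reason: `missing scope ${requiredScope}` };
  }
  // Tenant check: only known tenants may use the tools (an empty list rejects everyone)
  if (!claims.tid || !allowedTenantIds.includes(claims.tid)) {
    return { isValid: false, reason: 'tenant not allowed' };
  }
  return { isValid: true };
}
```

The returned `isValid` flag matches the shape the middleware reads from `tokenValidation`. The `reason` is meant for server-side logging rather than for the client.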

Service connection lifecycle

For the sake of simplicity, the server is implemented in a stateless manner: for each incoming request, a new StreamableHTTPServerTransport instance is created. By avoiding shared transport state, the system ensures that responses are always routed to the correct client connection. The transport is configured without a session ID generator and with JSON response formatting enabled, reinforcing the stateless nature of the operation. Once created, the transport connects to the MCP server and processes the incoming request body. A cleanup mechanism is established through the response’s close event, ensuring that transport resources are properly released when the connection terminates.
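The same per-request isolation matters for the authentication context. If the token were stored in a plain object on `app.locals`, which is shared by all requests of the Express app, overlapping requests could overwrite each other’s token before a tool reads it. Whether the reference implementation’s authContext already guards against this is not shown here. One way to scope a value to a single request is Node.js’s `AsyncLocalStorage`. The sketch below is an assumption-based alternative, and the names `runWithAuthContext` and `getAuthContext` are hypothetical.

```typescript
import { AsyncLocalStorage } from 'node:async_hooks';

// Hypothetical request-scoped auth context (not the reference implementation's authContext)
interface AuthContext {
  token: string;
}

const authStorage = new AsyncLocalStorage<AuthContext>();

// Run `fn` (and everything it awaits) with the given auth context
function runWithAuthContext<T>(context: AuthContext, fn: () => T): T {
  return authStorage.run(context, fn);
}

// Read the auth context of the current request (undefined outside runWithAuthContext)
function getAuthContext(): AuthContext | undefined {
  return authStorage.getStore();
}
```

In the route handler, the token would then be set with `runWithAuthContext({ token }, () => transport.handleRequest(req, res, req.body))`, and a tool would read it with `getAuthContext()?.token`. This assumes that the MCP SDK invokes the tool handler within the asynchronous call chain started by `handleRequest`, which is worth verifying for the SDK version in use.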

The MCP server

For instructional purposes, the MCP server in the reference implementation integrates with Microsoft’s identity platform and makes an authenticated request to the Microsoft Graph API (downstream API). The service thus acts as a «bridge» between MCP clients and Microsoft Graph, enabling access to tenant-specific user data through a well-structured, authenticated channel. The system handles secure, authenticated requests by leveraging the On-Behalf-Of (OBO) token flow of Microsoft Entra ID, which allows the server to act on behalf of the authenticated user who uses the agent. This architecture is particularly valuable in enterprise scenarios where applications need to access organizational data while preserving user identity and permissions.

Let’s focus first on the whole implementation before drilling down into details:

```typescript
// server/src/mcp/server.ts
import { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js';
import { OnBehalfOfCredential } from '@azure/identity';
import { z } from 'zod';
import AuthenticatedApiClient from '../utils/api-client';
// authContext (the request-scoped authentication context) comes from the reference implementation; import omitted

export const server = new McpServer({
  name: 'ts-mcpserver',
  version: '1.0.0',
});

server.registerTool(
  'get all users in the user\'s tenant',
  {
    title: 'Secured Backend API from Microsoft Graph to call all users in the user\'s tenant',
    description: 'Calls a secured backend API using authentication via access token from Microsoft Entra ID. The response contains a list of all users in the user\'s tenant.',
    inputSchema: { companyName: z.string().optional().describe('The company name associated with the tenant') },
    outputSchema: {
      result: z.string(),
      authenticated: z.boolean().optional(),
      userData: z.any().optional(),
      error: z.string().optional(),
    },
  },
  async ({ companyName }) => {
    try {
      const userToken = authContext.Stateless.getAuthContext()?.token;
      if (!userToken) {
        throw new Error('No user token available in the authentication context');
      }

      // Make an OBO request to get an access token for the backend API on behalf of the user
      const oboTokenCredential = new OnBehalfOfCredential({
        tenantId: process.env.AZURE_TENANT_ID || '',
        clientId: process.env.AZURE_CLIENT_ID || '',
        clientSecret: process.env.AZURE_CLIENT_SECRET || '',
        userAssertionToken: userToken,
      });

      // Call the secured Graph API to get all users in the tenant;
      // the API client obtains the access token from the OBO credential
      const client = new AuthenticatedApiClient('https://graph.microsoft.com', {
        scopes: ['https://graph.microsoft.com/.default'],
        tokenCredential: oboTokenCredential,
      });
      const apiResponse = await client.get<any>('/v1.0/users');

      const output = {
        result: `Secured API call processed message: "${companyName}" with data: ${JSON.stringify(apiResponse?.value)}`,
        userData: apiResponse?.value,
      };
      return {
        content: [{ type: 'text', text: JSON.stringify(output, null, 2) }],
        structuredContent: output,
      };
    } catch (error) {
      console.error('Error in secured API call:', error);
      const errorMessage = error instanceof Error ? error.message : 'Unknown error occurred';
      return {
        content: [{ type: 'text', text: `Error: ${errorMessage}` }],
        structuredContent: { result: 'API call failed', authenticated: false, error: errorMessage },
        isError: true, // tells the client (and the LLM) that the tool call failed
      };
    }
  },
);
```

Tool Registration

The MCP server registers a tool called “get all users in the user’s tenant” with metadata including a title, a description, and schema definitions for both input and output. For instructional purposes, the tool accepts an optional company name parameter and defines a structured output schema that includes the API result, the authentication status, and the user data. This registration pattern follows MCP conventions by providing clear tool capabilities and expected data structures, enabling clients to understand and interact with the tool effectively.
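One practical caveat: the tool name is what the LLM provider sees as the function name, and some providers are strict about it. OpenAI’s function calling, for example, only accepts letters, digits, underscores and dashes, up to 64 characters. Depending on the provider, a name containing spaces and an apostrophe may therefore be rejected. Keeping the human-readable text in `title` and using a machine-friendly name is the safer choice. The hypothetical helper below derives such a name from a display text.

```typescript
// Hypothetical helper: derive a provider-friendly tool name (letters, digits,
// underscores, dashes; at most 64 characters) from a human-readable label.
function toToolName(label: string, maxLength = 64): string {
  const name = label
    .toLowerCase()
    .replace(/'/g, '') // drop apostrophes: "user's" -> "users"
    .replace(/[^a-z0-9_-]+/g, '_') // any other run of invalid characters -> "_"
    .replace(/^_+|_+$/g, ''); // trim leading/trailing underscores
  return name.slice(0, maxLength) || 'tool';
}
```

With this helper, `toToolName("get all users in the user's tenant")` yields `get_all_users_in_the_users_tenant`, while the readable text can stay in the tool’s `title`.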

OBO Token Flow

To handle the request on behalf of the calling user, the tool retrieves the user’s access token from the authentication context. It then creates an OnBehalfOfCredential instance configured with the application’s Azure tenant ID, client ID, and client secret, which are the application’s own client credentials. This credential presents the original user token as an assertion, which allows the server application to obtain a new access token for the Microsoft Graph API that preserves the user’s identity. This pattern makes sure that downstream API calls are made with the right user context and permissions, maintaining security boundaries while enabling service-to-service communication.
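Under the hood, the OBO exchange is a single request to the Microsoft identity platform token endpoint. The sketch below builds that request (it does not send it) to show which values are involved. It is for illustration only, because `OnBehalfOfCredential` performs this exchange for you and also takes care of token caching. The parameter names follow Microsoft’s documentation of the OAuth 2.0 On-Behalf-Of flow. The function name `buildOboTokenRequest` is hypothetical.

```typescript
// Hypothetical illustration of the OAuth 2.0 on-behalf-of token request
// (OnBehalfOfCredential performs this exchange for you).
interface OboRequestInput {
  tenantId: string;
  clientId: string;
  clientSecret: string;
  userAssertion: string; // the access token the MCP client sent to this server
  scope: string; // e.g. 'https://graph.microsoft.com/.default'
}

function buildOboTokenRequest(input: OboRequestInput): { url: string; body: URLSearchParams } {
  const body = new URLSearchParams({
    grant_type: 'urn:ietf:params:oauth:grant-type:jwt-bearer',
    client_id: input.clientId,
    client_secret: input.clientSecret, // the app's own credentials
    assertion: input.userAssertion, // carries the user's identity
    scope: input.scope,
    requested_token_use: 'on_behalf_of',
  });
  return {
    url: `https://login.microsoftonline.com/${encodeURIComponent(input.tenantId)}/oauth2/v2.0/token`,
    body,
  };
}
```

Sent as an `application/x-www-form-urlencoded` POST, a successful response contains an `access_token` for the requested scope that acts on behalf of the signed-in user.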

Authenticated Requests to Downstream API

In this reference implementation, the tool uses a custom AuthenticatedApiClient to make secure requests to the Microsoft Graph API endpoint. This could be replaced by any other client library that handles calls to the respective downstream API. The client is configured with the Graph API base URL and the appropriate scopes, and it handles token inclusion and renewal automatically through the OBO credential. The tool makes a GET request to the /v1.0/users endpoint to retrieve the users in the tenant. It then structures the response into a standardized format that includes both the raw user data and metadata about the operation’s success.
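Keep in mind that Microsoft Graph returns collections such as /v1.0/users in pages. When more results exist, the response contains an `@odata.nextLink` URL that points to the next page, so a tool that really needs all users has to follow these links. The sketch below assumes a `getPage` function that performs an authenticated GET for an absolute URL, for example built on the reference implementation’s AuthenticatedApiClient or any other client (whose exact API is not shown here). `fetchAllPages` and `GraphPage` are hypothetical names.

```typescript
// Shape of a Microsoft Graph collection response page
interface GraphPage<T> {
  value: T[];
  '@odata.nextLink'?: string;
}

// Hypothetical helper: follows @odata.nextLink until all pages are collected.
// `getPage` is assumed to perform an authenticated GET for an absolute URL.
async function fetchAllPages<T>(
  firstUrl: string,
  getPage: (url: string) => Promise<GraphPage<T>>,
  maxPages = 50, // safety net against unexpectedly large result sets
): Promise<T[]> {
  const items: T[] = [];
  let url: string | undefined = firstUrl;
  let pageCount = 0;
  while (url && pageCount < maxPages) {
    const response: GraphPage<T> = await getPage(url);
    items.push(...response.value);
    url = response['@odata.nextLink'];
    pageCount++;
  }
  return items;
}
```

A call such as `fetchAllPages('https://graph.microsoft.com/v1.0/users?$select=id,displayName', getPage)` would additionally limit the returned properties, which keeps the tool output (and the LLM context) small.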

Final notes

Architecture scalability

The modular separation of server setup and client setup enables good scalability. The MCP server can be scaled independently of the client instances, for instance to handle peak loads. Because the server handles each request statelessly, additional instances can be added without session management. Express, as a lightweight framework, keeps the overhead of HTTP request handling low. In addition, the architecture supports the integration of additional backend services via downstream APIs, allowing functionality to be expanded flexibly without affecting the core application.

OAuth integration (token security)

The server requires a valid OAuth token from Microsoft Entra ID for every client request, and additional checks (scope check, tenant check) can be added as needed. This ensures that only authorised requests are processed, minimising potential attack vectors. The optional “on-behalf-of” flow further increases security when communicating with downstream backend services (downstream APIs). Requests from the MCP service to the backend are made in the user’s context without passing on the user’s original token, assuming the downstream API accepts tokens obtained via the OBO flow.

Better make use of an AI toolkit

By using the «AI Toolkit for TypeScript» by Vercel, you can rely on a robust and very flexible abstraction that allows you to integrate LLM providers of your choice without changing the core logic of the MCP setup. Particularly valuable is the native integration of tools and function calling. It allows language models to call (remote) MCP server tools while the toolkit handles the complex mapping, along with other features that are crucial for production-ready agents (such as automatic stop conditions or conversation history management).

That’s it – what do you say: fool or cool? Have fun and keep coding! 😃

Links to further explanations or basics of MCP setups

- Damien Bowden: Implement a secure MCP OAuth desktop client using OAuth and Entra ID
  see: https://damienbod.com/2025/10/16/implement-a-secure-mcp-oauth-desktop-client-using-oauth-and-entra-id/

- Cédric Mendelin: Model Context Protocol in .NET
  see: https://medium.com/data-science-collective/model-context-protocol-in-net-06c6076b6385